We are live!

As a company, we’re excited to finally be going live with our first product. Here’s a recap of how far we’ve come and where we’re going.

Where we started

The first GPU, an NVIDIA Tesla V100, and the server I bought for it: the purchase that sparked the creation of the company that became TensorDock


I vividly remember scrolling through eBay on April 5th, 2020, and submitting the only bid on an NVIDIA Tesla V100 after exchanging messages with a potential client. With just minutes left until the auction closed and zero competitors, I was about to press the “bid” button. My arms were shaking, and I had to take a few walks around the house before I pressed it. It was an amount of money that could have purchased four servers. Compared to the Bitmain Antminers and even the consumer GPUs I had been using to mine cryptocurrency, the Tesla V100 couldn’t guarantee any sort of return. If my customer cancelled, I would be left holding a GPU in an illiquid resale market where only other AI startups and enterprises saw the value of such cards.

The first thing I learned was that GPU servers are very different from GPU miners. Servers need beefy RAM, CPU, and disk allocations, whereas the miners I was used to ran on the cheapest hardware available. Oh yeah, and servers need high-bandwidth internet connections, too!

I thought I had made a huge mistake. Why buy expensive hardware to chase an uncertain source of income? Yes, the payouts would be larger, but what if the customer cancelled and no new customer came along the next month?

So, for the next two months, it stayed a one-man show. I handled the emails that arrived at this new side project, which I called Dash Cloud, bought hardware when needed, and set up servers. Growing beyond that felt too risky.

In the fall of 2020, I took a big leap. I emailed over two hundred NVIDIA distributors asking for their best GPU pricing and placed a bulk order through one of them. Then I brought on my first two friends, Sam and Owen. Throughout the year we were all still part-time (we are full-time students), but as a team we held each other accountable and covered support in shifts. By then, we were moving from setting up servers on request to keeping pre-configured servers available on demand, which strengthened our relationships with wholesale marketplace resellers. Things stayed like this for another year, until we made our next big leap.

TensorDock’s beta launch

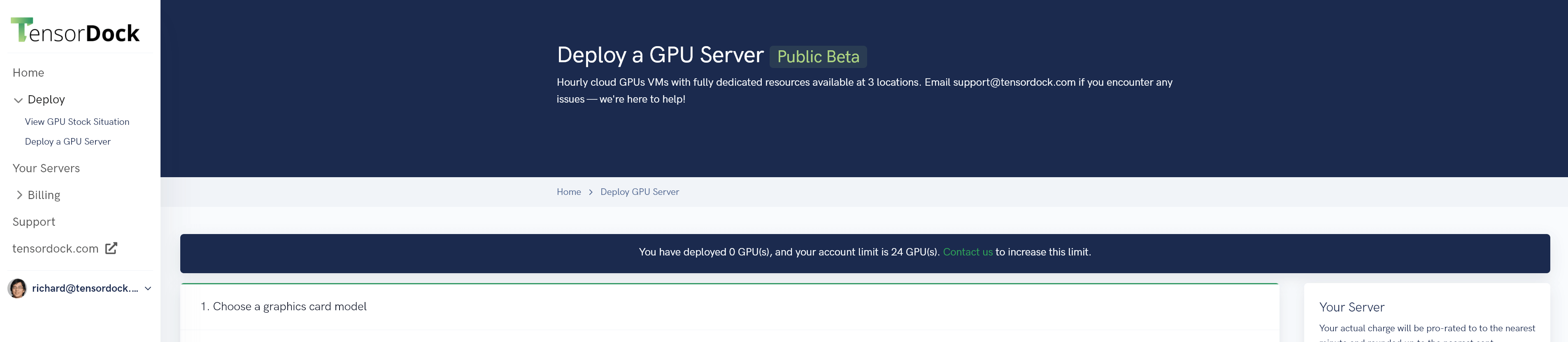

Screenshot of our console taken in December 2021. Remember how beta-like it looked?


By the third quarter of 2021, manually setting up servers for customers was taking up the vast majority of our time. So, in October, I began building a platform that would let customers deploy servers on demand without any manual intervention from us. We launched on December 28th and answered questions on LowEndTalk and LowEndSpirit at a time when most others would have been taking a break. We didn’t care; we believed we could change the computing landscape, so we worked around the clock to make it happen.

We used a new name, TensorDock, so that old customers could continue to use our Dash Cloud website without disruption.

Left: screenshot of our internal Grafana taken on January 28, 2022. Right: a new shipment of hardware


On LowEndTalk and LowEndSpirit, we received a ton of feedback, which we used to continuously improve our platform. We also began scaling our software. As we grew from a few TB to far beyond, we realized that we needed to operate at a large scale to truly deliver reliability and value to our customers.

TensorDock Core Cloud has launched!

After exactly seven months in beta, on Thursday we finally launched TensorDock Core Cloud on Hacker News, Reddit, and Product Hunt! We’ve also created our own animated advertisement and revamped our website.

Here are a few features we’re excited to have added:

- CPU-only virtual machines
    - You can now deploy a CPU-only virtual machine with a fully dedicated CPU and 4 GB of DDR4 RAM for less than $20/month! Our pricing scales linearly, so the cost advantage of TensorDock over other cloud providers becomes especially apparent at 100 GB+ of RAM, where other providers often raise prices much faster than linearly.
- Server modifications
    - You can spin up a CPU server, upload your data, modify it into a GPU server to train your ML models, and then modify it back. By modifying your server so that it only ever uses what you actually need, you can save a lot of money :)
- Custom alerts
    - You can now add custom alerts on the billing page! Set your own alert thresholds and never run out of credits again.

Our software has now orchestrated thousands of virtual machines, and with these new features, we feel that our product has finally become enterprise-ready. So we’re removing all “beta” branding from our console’s pages.

For the next two weeks, we’re lowering Core Cloud pricing by 10% to celebrate. If we see significant additional demand, we might make this change permanent. Subscription prices will remain unchanged.

Other updates

Over the past few months, we’ve seen thousands of virtual machines deployed on our platform. We’ve added a host of new features, grown our team to 18, and negotiated colocation rates in data centers as far away as Singapore. We’re also excited to join NVIDIA Inception. NVIDIA’s ecosystem has been the keystone of our business since day one, whether through exceedingly high-performing hardware (GPUs, DPUs, and Mellanox network switches) or effective software like CUDA. With access to discounted hardware and more support, we’ll be able to propel TensorDock to the next level.

Closing words

I’m thrilled to call you a user of our platform. Over the past few months, our fraud rate has been cut from 20% to zero. I think we’ve found a community that truly supports us. As we embark on a new journey and launch a marketplace in the coming weeks, we’ll be more receptive than ever to feedback. Feel free to send me an email anytime :)

Oh yeah, if you’re curious, take a look inside one of the data centers where we host hardware: https://youtu.be/lfaj1tuOozs