Orchestrate generative AI workloads using dstack on TensorDock

TensorDock and dstack partner to offer seamless generative AI workload orchestration atop the world’s most cost-effective GPU infrastructure.

I’m thrilled to announce today that we’re an official cloud provider supported by dstack, a free-to-use open-source platform that streamlines generative AI workload orchestration. dstack offers an intuitive CLI and API for running development environments and tasks that are defined in code and replicable across cloud environments.

dstack for training

dstack lets you define LLM pre-training and fine-tuning jobs via CLI recipes and a Python API. You can utilize your preferred libraries or rely on provided templates. Once the task is completed, the compute instances will automatically be terminated, ensuring that you never overpay for compute.

dstack for development

dstack quickly and automatically provisions interactive dev environments from pre-defined configurations, so that you can develop more with less.

dstack and TensorDock

Together, dstack and TensorDock are committed to driving the development and adoption of LLMs, and starting today, you can enjoy the benefits of dstack on TensorDock. As a GPU cloud marketplace, we aggregate the offerings of dozens of independent hosts. Suppliers compete on pricing and reliability, giving customers the most affordable, reliable, and scalable offerings of any GPU cloud. By partnering with dstack, we aim to offer customers an easier, more replicable way to deploy their generative AI training and development workloads atop our cost-effective, scalable, and reliable cloud.

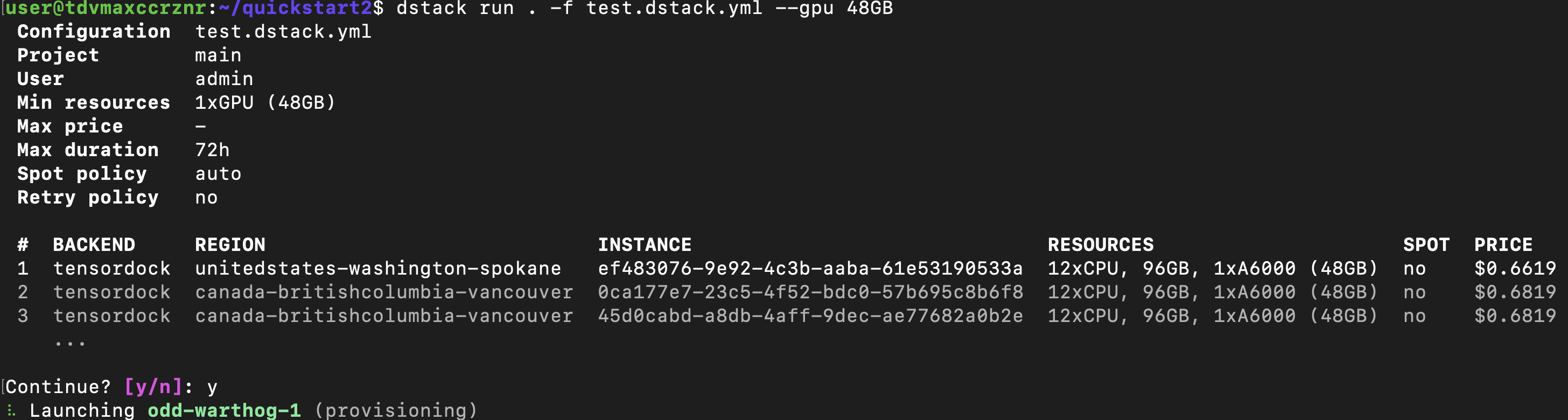

Configuring your TensorDock account with dstack is very easy. Simply generate an authorization key in your TensorDock API settings and add it to the ~/.dstack/server/config.yml file. After you restart the dstack server, you’ll be able to run dstack jobs on TensorDock’s infrastructure!
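As a rough illustration of that configuration step, here is a minimal Python sketch that renders a TensorDock backend entry for the dstack server config. The YAML layout and field names (`projects`, `backends`, `type: tensordock`, `creds`, `api_key`, `api_token`) are assumptions about dstack's server config format, and the helper functions are hypothetical, so please check the dstack documentation for the exact schema your version expects.

```python
from pathlib import Path


def render_tensordock_config(api_key: str, api_token: str, project: str = "main") -> str:
    """Return YAML text for a dstack server config with one TensorDock backend.

    The field names below are assumptions about the config schema, not a
    definitive reference.
    """
    lines = [
        "projects:",
        f"- name: {project}",
        "  backends:",
        "  - type: tensordock",
        "    creds:",
        "      type: api_key",
        f"      api_key: {api_key}",
        f"      api_token: {api_token}",
    ]
    return "\n".join(lines) + "\n"


def write_config(text: str, path: Path) -> Path:
    """Write the config text to path, creating parent directories if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(text)
    return path


if __name__ == "__main__":
    # Placeholder credentials: substitute the values from your TensorDock API settings.
    config_text = render_tensordock_config("YOUR_API_KEY", "YOUR_API_TOKEN")
    print(config_text)
    # Uncomment to write the file. This overwrites any existing config.
    # write_config(config_text, Path.home() / ".dstack" / "server" / "config.yml")
```

The script only prints the rendered config by default, so you can review it before touching an existing config.yml. Remember to restart the dstack server after changing the file.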

Learn more about dstack, and create an API key on TensorDock here.